Determiner or Article: A grammatical marker of definiteness (the) or indefiniteness (a, an).

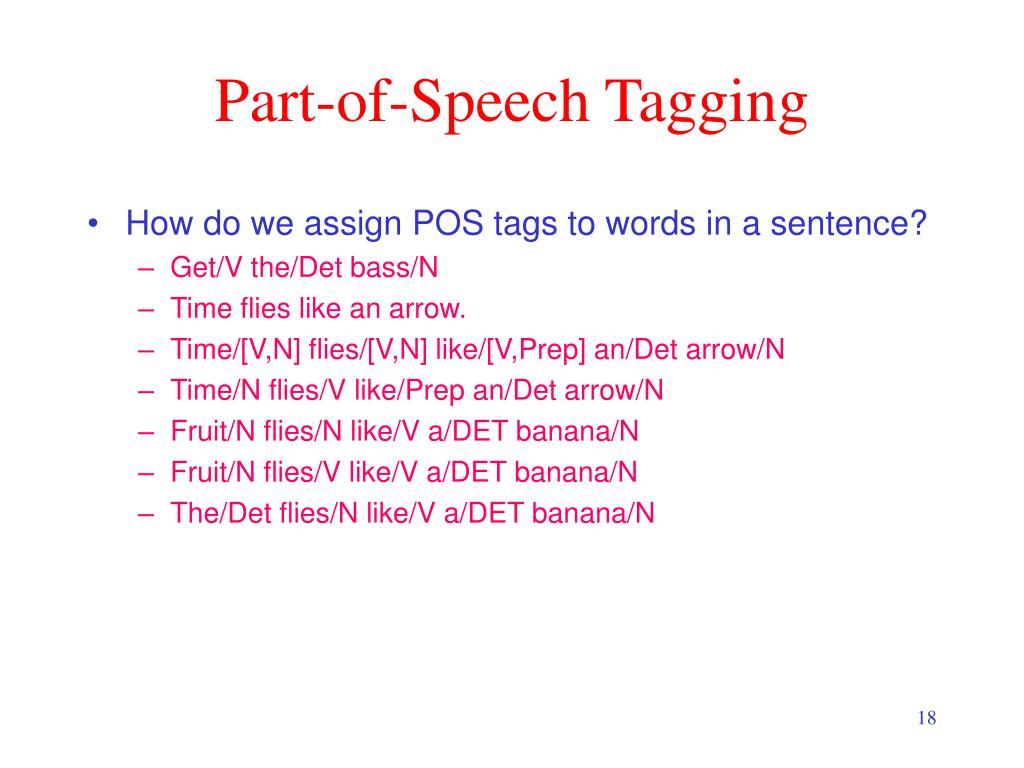

Interjection: An interjection is a word used to express emotion. Conjunction: A conjunction joins words, phrases, or clauses. Preposition: A preposition is a word placed before a noun or pronoun to form a phrase modifying another word in the sentence. Adverb: An adverb modifies or describes a verb, an adjective, or another adverb. Adjective: An adjective modifies or describes a noun or pronoun. Pronoun: A pronoun is a word used in place of a noun. Noun: A noun is the name of a person, place, thing, or idea. The Butte College introduces the following definitions: There are eight (sometimes nine ) different parts of speech in English that are commonly defined. While POS tags are used in higher-level functions of NLP, it’s important to understand them on their own, and it’s possible to leverage them for useful purposes in your text analysis. In Natural Language Processing (NLP), POS is an essential building block of language models and interpreting text. Part of Speech (POS) is a way to describe the grammatical function of a word. Lets test our classifier, def pos_tag(sentence): tags = clf.predict() return zip(sentence, tags) print pos_tag(word_tokenize('This is my friend, Manish.Photo by Alexandre Pellaes on Unsplash What is a Part of Speech? This isn’t much bad accuracy for a decision tree classifier on such data. Now, we test our model’s accuracy… print "Accuracy:", clf.score(X_test, y_test) Next, X_test, y_test = transform_to_dataset(test_sentences) It takes a fair bit 🙂 Here, we are done with the training part. classifier=Pipeline() classifier.fit(X, y) # Use only the first 10K samples if you're running it multiple times. from ee import DecisionTreeClassifier from sklearn.feature_extraction import DictVectorizer from sklearn.pipeline import Pipeline # w e will create a pipeline including DictVectorizer & our classifier.It's easier that way round. We can experiment with n number of classifiers here. 
Here, we are all set to train the classifier which is DECISION_TREE_CLASSIFIER here. Implementation of it is as from sklearn.feature_extraction import DictVectorizer # Fit our DictVectorizer with our set of features dict_vectorizer = DictVectorizer(sparse=False) dict_vectorizer.fit(X,y) To proceed, sklearn has a built-in function called DictVectorizer which provides a straightforward way to do that. Now, our neural network takes vectors as inputs, so we need to convert our dictionary features to vectors.

part=int(.75 * len(tagged_sentences)) training_sentences = tagged_sentences test_sentences = tagged_sentences def transform_to_dataset(tagged_sentences): X, y =, for tagged in tagged_sentences: for index in range(len(tagged)): X.append(features(untag(tagged), index)) y.append(tagged) return X, y X, y = transform_to_dataset(training_sentences) 75*len(tagged_sentences) to train and rest for testing. Now, as in machine learning, we need to split the dataset for training and testing. We remove the tag for each tagged term def untag(tagged_sentence): return def features(sentence, index): //sentence:, index: the index of the word return But we can think like, the 2-letter suffix is a great indicator of past-tense verbs, ending in ‘ed’, 3-letter suffix helps to recognize the present participle ending in ‘ ing‘. These properties could include pieces of information about previous and next words as well as prefixes and suffixes. For each term, we create a dictionary of features depending on the sentence where the term has been extracted from. This turns out to be a multi-class classification problem with more than forty different classes. (term,tag) as print "Tagged sentences: ", len(tagged_sentences) print "Tagged words:", len(_words()) # Tagged sentences: 3914 # Tagged words: 100676 Loading the tagged sentences… from rpus import treebank sentences = treebank.tagged_sents(tagset='universal') import random print(random.choice(sentences))

Parts of speech tagger download#

So, let’s kick off our 1st part….įirst of all, we download the annotated corpus: import nltk nltk.download('treebank')

Parts of speech tagger series#

Though there are various methods to do POS tagging with Ai, we will divide this series in a trio, PART-1 ( using decision trees), PART-2 ( using crf-conditional random field), PART-3( using LSTMs/GRUs). from nltk import word_tokenize, pos_tag print pos_tag(word_tokenize("I'm learning NLP")) # NLTK default tagger, Stanford CoreNLP tagger, Penn Treebank, etc.

We also need a tag set for our machine learning, deep learning models. It simply implies labelling words with their appropriate Part-Of-Speech as a noun, verb, etc. POS tagging is one of the main components of almost any Natural Language analysis. In today’s evolving field of Ai, Artificial neural networks have been applied successfully to compute POS tagging with great performance.
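
The claim that suffixes signal tags is easy to check on a small scale. The sketch below uses a tiny made-up list of (word, tag) pairs, not drawn from the treebank, and counts which Penn Treebank tags co-occur with the 2-letter suffix "ed" and the 3-letter suffix "ing". The helper name `suffix_tag_counts` is hypothetical. It also shows why a suffix is a strong hint rather than a rule: "bed" and "thing" are nouns.

```python
from collections import Counter, defaultdict

# A tiny, made-up list of (word, Penn Treebank tag) pairs, for illustration only.
toy_corpus = [
    ("walked", "VBD"), ("jumped", "VBD"), ("played", "VBD"), ("bed", "NN"),
    ("running", "VBG"), ("singing", "VBG"), ("eating", "VBG"), ("thing", "NN"),
]


def suffix_tag_counts(tagged_words, n):
    """Map each n-letter suffix to a Counter of the tags it appears with."""
    counts = defaultdict(Counter)
    for word, tag in tagged_words:
        counts[word[-n:]][tag] += 1
    return counts


ed = suffix_tag_counts(toy_corpus, 2)["ed"]
ing = suffix_tag_counts(toy_corpus, 3)["ing"]
print(ed.most_common())   # [('VBD', 3), ('NN', 1)]
print(ing.most_common())  # [('VBG', 3), ('NN', 1)]
```

A decision tree can exploit exactly this kind of skew, while the neighbouring-word features help it resolve the exceptions.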

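To see the shape of the training data without downloading the corpus, here is a minimal sketch of the split-and-transform step on four hand-made tagged sentences. It assumes a deliberately reduced feature dictionary (the word, its 2-letter suffix and the previous word) instead of the full feature set, and the sentences and tags are illustrative only.

```python
def untag(tagged_sentence):
    """Drop the tags, keeping only the words."""
    return [word for word, _ in tagged_sentence]


def features(sentence, index):
    """Reduced feature dict used only in this sketch."""
    word = sentence[index]
    return {
        "word": word,
        "suffix-2": word[-2:],
        "prev_word": "" if index == 0 else sentence[index - 1],
    }


def transform_to_dataset(tagged_sentences):
    """Turn tagged sentences into one (features, tag) pair per token."""
    X, y = [], []
    for tagged in tagged_sentences:
        words = untag(tagged)
        for index in range(len(tagged)):
            X.append(features(words, index))
            y.append(tagged[index][1])
    return X, y


tagged_sentences = [
    [("I", "PRP"), ("walked", "VBD")],
    [("Dogs", "NNS"), ("bark", "VBP"), (".", ".")],
    [("She", "PRP"), ("sings", "VBZ")],
    [("Birds", "NNS"), ("fly", "VBP")],
]

part = int(.75 * len(tagged_sentences))  # 3 of the 4 sentences go to training
training_sentences = tagged_sentences[:part]
test_sentences = tagged_sentences[part:]

X, y = transform_to_dataset(training_sentences)
X_test, y_test = transform_to_dataset(test_sentences)
print(len(X), len(X_test))  # 7 2
print(y)
```

Splitting by sentence rather than by token keeps every word of a test sentence out of training, so the previous/next-word features never leak between the two sets.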
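
DictVectorizer turns each feature dictionary into a numeric vector. As a rough illustration of what happens to string-valued features, the sketch below re-implements the idea in plain Python: every distinct name=value pair becomes one column, in sorted order, and a row holds 1.0 where that pair occurs. `fit_vocabulary` and `transform` are hypothetical helpers, not scikit-learn's API, and unlike DictVectorizer this sketch treats every value as categorical instead of passing numeric values through.

```python
def fit_vocabulary(dicts):
    """Assign a column index to every 'name=value' pair, in sorted order."""
    names = sorted({f"{k}={v}" for d in dicts for k, v in d.items()})
    return {name: i for i, name in enumerate(names)}


def transform(dicts, vocabulary):
    """One-hot encode each dict; pairs absent from the vocabulary are skipped."""
    rows = []
    for d in dicts:
        row = [0.0] * len(vocabulary)
        for k, v in d.items():
            col = vocabulary.get(f"{k}={v}")
            if col is not None:
                row[col] = 1.0
        rows.append(row)
    return rows


train = [{"word": "walked", "suffix-2": "ed"}, {"word": "home", "suffix-2": "me"}]
vocab = fit_vocabulary(train)
print(sorted(vocab))  # ['suffix-2=ed', 'suffix-2=me', 'word=home', 'word=walked']
print(transform([{"word": "jumped", "suffix-2": "ed"}], vocab))  # [[1.0, 0.0, 0.0, 0.0]]
```

In this sketch an unseen word such as "jumped" gets no column of its own, so only its suffix feature survives, which is one reason the prefix and suffix features are useful for words the model never saw during training.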

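Calling `score` on a scikit-learn classifier, or on a pipeline ending in one, reports mean accuracy: the fraction of test tokens whose predicted tag equals the gold tag. A plain-Python version of that computation is sketched below; `accuracy` is a hypothetical helper, and the "predicted" tags for the example sentence 'This is my friend, Manish.' are invented to show one mistake, not real model output.

```python
def accuracy(y_true, y_pred):
    """Fraction of tokens whose predicted tag matches the gold tag."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("need at least one token")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Gold Penn Treebank tags for: This is my friend , Manish .
gold = ["DT", "VBZ", "PRP$", "NN", ",", "NNP", "."]
# Invented prediction that mislabels the proper noun "Manish" as NN
pred = ["DT", "VBZ", "PRP$", "NN", ",", "NN", "."]
print(round(accuracy(gold, pred), 3))  # 0.857
```

Because accuracy is computed per token, frequent and easy tokens such as punctuation and determiners lift the score, so it is worth also inspecting errors on rarer tags.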
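
To relate tagger output back to the traditional parts of speech discussed above, the sketch below maps the 12 tags of NLTK's coarse universal tagset (what `tagset='universal'` returns) onto those names. The dictionary `UNIVERSAL_TO_POS` and the `describe` helper are hypothetical; tags with no counterpart among the traditional parts of speech (numerals, particles, punctuation, "other") keep their original tag, and the 12-tag set has no separate interjection tag.

```python
# NLTK universal tags mapped to traditional parts of speech; None means
# "no direct counterpart", in which case the original tag is kept.
UNIVERSAL_TO_POS = {
    "NOUN": "Noun",
    "PRON": "Pronoun",
    "VERB": "Verb",
    "ADJ": "Adjective",
    "ADV": "Adverb",
    "ADP": "Preposition",  # adpositions: prepositions and postpositions
    "CONJ": "Conjunction",
    "DET": "Determiner or Article",
    "NUM": None,           # numerals
    "PRT": None,           # particles, e.g. "to"
    ".": None,             # punctuation
    "X": None,             # other: foreign words, typos, etc.
}


def describe(tagged_sentence):
    """Replace each universal tag with its part-of-speech name when one exists."""
    return [(word, UNIVERSAL_TO_POS.get(tag) or tag) for word, tag in tagged_sentence]


print(describe([("The", "DET"), ("cat", "NOUN"), ("sat", "VERB"), (".", ".")]))
# [('The', 'Determiner or Article'), ('cat', 'Noun'), ('sat', 'Verb'), ('.', '.')]
```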